At SC25, NVIDIA unveiled advances across NVIDIA BlueField DPUs, next-generation networking, quantum computing, national research, AI physics and more, as accelerated systems drive the next chapter in AI supercomputing.

NVIDIA also highlighted storage innovations powered by the NVIDIA BlueField-4 data processing unit, part of the full-stack BlueField platform that accelerates gigascale AI infrastructure.

More details also came on NVIDIA Quantum-X Photonics InfiniBand CPO networking switches, which enable AI factories to drastically reduce energy consumption and operational costs, including that TACC, Lambda and CoreWeave plan to integrate them.

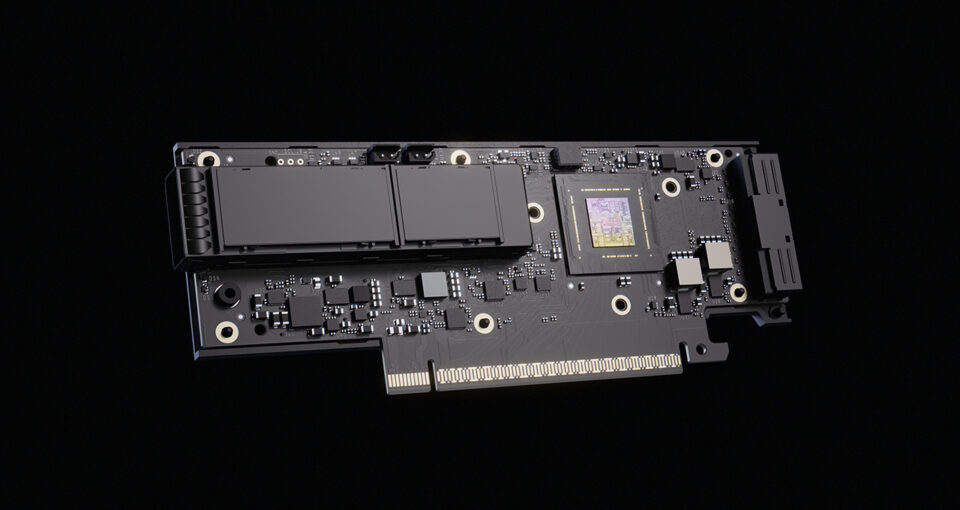

Last month, NVIDIA began shipping DGX Spark, the world’s smallest AI supercomputer. DGX Spark packs a petaflop of AI performance and 128GB of unified memory into a desktop form factor, enabling developers to run inference on models of up to 200 billion parameters and fine-tune models locally. Built on the Grace Blackwell architecture, it integrates NVIDIA GPUs, CPUs, networking, CUDA libraries and the full NVIDIA AI software stack.

DGX Spark’s unified memory and NVIDIA NVLink-C2C deliver 5x the bandwidth of PCIe Gen5, enabling faster GPU-CPU data exchange. This boosts training efficiency for large models, reduces latency and supports seamless fine-tuning workflows, all within a desktop form factor.

NVIDIA Apollo Unveiled as Latest Open Model Family for AI Physics

NVIDIA Apollo, a family of open models for AI physics, was also introduced at SC25. Applied Materials, Cadence, Lam Research, Luminary Cloud, KLA, PhysicsX, Rescale, Siemens and Synopsys are among the industry leaders adopting these open models to simulate and accelerate their design processes in a broad range of fields: electronic device automation and semiconductors, computational fluid dynamics, structural mechanics, electromagnetics, weather and more.

The family of open models harnesses the latest developments in AI physics, incorporating best-in-class machine learning architectures, such as neural operators, transformers and diffusion methods, with domain-specific knowledge. Apollo will provide pretrained checkpoints and reference workflows for training, inference and benchmarking, allowing developers to integrate and customize the models for their specific needs.

NVIDIA Warp Supercharges Physics Simulations

NVIDIA Warp is a purpose-built open-source Python framework that accelerates computational physics and AI on GPUs by up to 245x.

NVIDIA Warp provides a structured approach for simulation, robotics and machine learning workloads, combining the accessibility of Python with performance comparable to native CUDA code.

Warp supports the creation of GPU-accelerated 3D simulation workflows that integrate with ML pipelines in PyTorch, JAX, NVIDIA PhysicsNeMo and NVIDIA Omniverse. This allows developers to run complex simulation tasks and generate data at scale without leaving the Python programming environment.

By offering CUDA-level performance with Python-level productivity, Warp simplifies the development of high-performance simulation workflows. It’s designed to accelerate AI research and engineering by lowering barriers to GPU programming, making advanced simulation and data generation more efficient and widely accessible.

Siemens, Neural Concept and Luminary Cloud, among others, are adopting NVIDIA Warp.

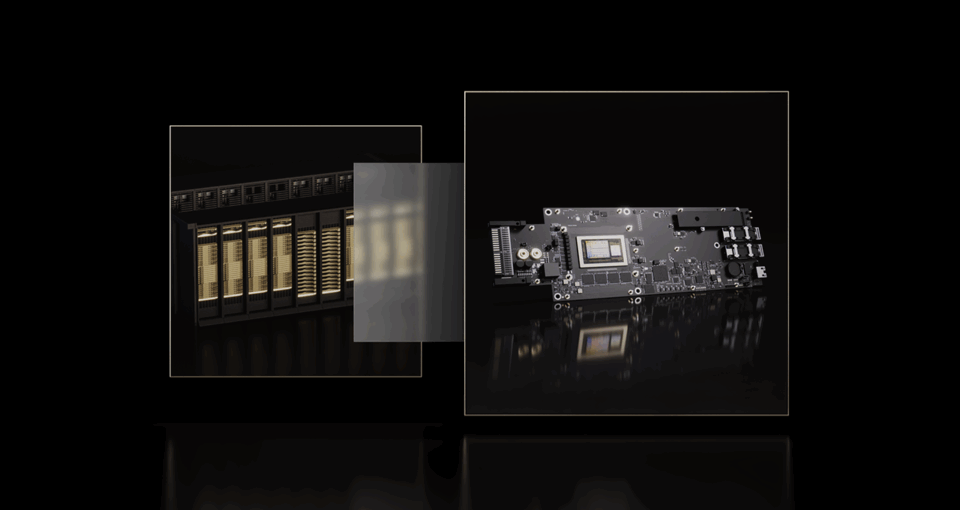

Showcasing BlueField-4 for Powering the OS of AI Factories

Unveiled at GTC Washington, D.C., NVIDIA BlueField-4 DPUs are powering the operating system of AI factories. By offloading, accelerating and isolating critical data center functions (networking, storage and security), they free up CPUs and GPUs to focus entirely on compute-intensive workloads.

BlueField-4, combining a 64-core NVIDIA Grace CPU and NVIDIA ConnectX-9 networking, unlocks unprecedented performance, efficiency and zero-trust security at scale. It supports multi-tenant environments, rapid data access and real-time protection, with native integration of NVIDIA DOCA microservices for scalable, containerized AI operations. Together, they’re transforming data centers into intelligent, software-defined engines for trillion-token AI and beyond.

As AI factories and supercomputing centers continue to scale in size and capability, they require faster, more intelligent storage infrastructure to manage structured, unstructured and AI-native data for large-scale training and inference.

Leading storage innovators DDN, VAST Data and WEKA are adopting BlueField-4 to redefine performance and efficiency for AI and scientific workloads.

- DDN is building next-generation AI factories, accelerating data pipelines to maximize GPU utilization for AI and HPC workloads.

- VAST Data is advancing the AI pipeline with intelligent data movement and real-time efficiency across large-scale AI clusters.

- WEKA is launching its NeuralMesh architecture on BlueField-4, running storage services directly on the DPU to simplify and accelerate AI infrastructure.

Together, these HPC storage leaders are demonstrating how NVIDIA BlueField-4 transforms data movement and management, turning storage into a performance multiplier for the next era of supercomputing and AI infrastructure.

Adopting NVIDIA Co-Packaged Optics for Speed and Reliability

TACC, Lambda and CoreWeave announced that they will integrate NVIDIA Quantum-X Photonics CPO switches into next-generation systems as early as next year.

NVIDIA Quantum-X Photonics networking switches enable AI factories and supercomputing centers to drastically reduce energy consumption and operational costs. NVIDIA has achieved this fusion of electronic circuits and optical communications at massive scale.

As AI factories grow to unprecedented sizes, networks must evolve to keep pace. By eliminating traditional pluggable transceivers, a common cause of job runtime failures, NVIDIA Photonics switch systems not only deliver 3.5x better power efficiency but also perform with 10x higher resiliency, enabling applications to run 5x longer without interruption.

At GTC 2024 in Silicon Valley, NVIDIA unveiled NVIDIA Quantum-X800 InfiniBand switches, purpose-built to power trillion-parameter-scale generative AI models. These platforms deliver a staggering 800Gb/s end-to-end throughput, 2x the bandwidth and 9x the in-network compute of their predecessors, owing to such innovations as SHARPv4 and FP8 support.

As NVIDIA Quantum‑X800 continues to be widely adopted to meet the demands of massive-scale AI, NVIDIA Quantum‑X Photonics, announced at GTC earlier this year, addresses the critical power, resiliency and signal-integrity challenges of even larger deployments. By integrating optics directly on the switch, it eliminates failures caused by pluggable transceivers and link flaps, enabling workloads to run uninterrupted at scale and ensuring the infrastructure can support the next generation of compute-intensive applications up to 5x better than with pluggable transceivers.

“NVIDIA Quantum‑X Photonics represents the next step in building high-performance, resilient AI networks,” said Maxx Garrison, product manager for cloud infrastructure at Lambda. “These advances in power efficiency, signal integrity and reliability will be key to supporting efficient, large-scale workloads for our customers.”

SHARPv4 enables in-network aggregation and reduction, minimizing GPU-to-GPU communication overhead. Combined with FP8 precision, it accelerates training of trillion-parameter models by reducing bandwidth and compute demands, delivering faster convergence and higher throughput. It comes standard with NVIDIA Quantum‑X800 and Quantum‑X Photonics switches.
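To make the idea of in-network reduction concrete, here is a toy Python model. It is only an illustrative sketch, not SHARP itself and not an NVIDIA API: the names `switch_reduce`, `tree_allreduce`, `ring_bytes_sent_per_gpu` and `in_network_bytes_sent_per_gpu` are hypothetical, and the traffic formula is the textbook cost of a ring all-reduce rather than a measured figure.

```python
from typing import List, Tuple

Vector = List[float]


def switch_reduce(inputs: List[Vector]) -> Vector:
    """Toy 'switch': element-wise sum of the vectors arriving on its ports."""
    return [sum(column) for column in zip(*inputs)]


def tree_allreduce(gpu_vectors: List[Vector], radix: int = 2) -> Tuple[List[Vector], int]:
    """Reduce up a tree of toy switches, then hand every GPU the same total.

    Returns the per-GPU results and the number of switch levels traversed.
    """
    if not gpu_vectors:
        raise ValueError("need at least one GPU vector")
    if radix < 2:
        raise ValueError("radix must be at least 2")
    level = gpu_vectors
    levels = 0
    while len(level) > 1:
        # Each switch at this level sums the vectors from up to `radix` children.
        level = [switch_reduce(level[i:i + radix]) for i in range(0, len(level), radix)]
        levels += 1
    total = level[0]
    return [list(total) for _ in gpu_vectors], levels


def ring_bytes_sent_per_gpu(num_gpus: int, message_bytes: float) -> float:
    """Textbook ring all-reduce: each GPU sends 2 * (N - 1) / N of the message."""
    return 2 * (num_gpus - 1) * message_bytes / num_gpus


def in_network_bytes_sent_per_gpu(message_bytes: float) -> float:
    """In this toy model each GPU sends its vector into the network exactly once."""
    return message_bytes


grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
reduced, levels = tree_allreduce(grads)
print(reduced[0], levels)                    # [16.0, 20.0] 2
print(ring_bytes_sent_per_gpu(4, 100.0))     # 150.0
print(in_network_bytes_sent_per_gpu(100.0))  # 100.0
```

In this simplified picture the summation happens inside the switches, so each GPU injects its data once instead of forwarding partial sums around a ring. Real deployments involve many details the sketch ignores, such as precision, packetization and topology.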

“CoreWeave is building the Essential Cloud for AI,” said Peter Salanki, co-founder and chief technology officer at CoreWeave. “With NVIDIA Quantum-X Photonics, we’re advancing power efficiency and further enhancing the reliability CoreWeave is known for in supporting massive AI workloads at scale, helping our customers unlock the full potential of next-generation AI.”

The NVIDIA Quantum-X Photonics platform, anchored by the NVIDIA Quantum Q3450 CPO-based InfiniBand switch and ConnectX-8 SuperNIC, is engineered for the highest-performance environments that also require significantly lower power, higher resiliency and lower latency.

Supercomputing Centers Worldwide Adopting NVQLink

More than a dozen of the world’s top scientific computing centers are adopting NVQLink, a universal interconnect linking accelerated computing to quantum processors.

“Here at Supercomputing, we’re announcing that we’ve been working with the supercomputing centers worldwide that are dedicated and enthusiastic about building the next generation of quantum GPU, CPU GPU supercomputers, and how to connect them to their particular research area or deployment platform for quantum computing,” said Ian Buck, vice president and general manager of accelerated computing at NVIDIA.

NVQLink connects quantum processors with NVIDIA GPUs, enabling large‑scale workflows powered by the CUDA‑Q software platform. NVQLink’s open architecture provides the critical link supercomputing centers need to integrate diverse quantum processors while delivering 40 petaflops of AI performance at FP4 precision.

In the future, every supercomputer will draw on quantum processors to expand the problems it can solve, and every quantum processor will depend on GPU supercomputers to run correctly.

Quantum computing company Quantinuum’s new Helios QPU was integrated with NVIDIA GPUs through NVQLink, achieving the world’s first real‑time decoding of scalable qLDPC quantum error‑correction codes. The system maintained 99% fidelity compared with 95% without correction, thanks to NVQLink’s microsecond-scale latency.

With NVQLink, scientists and developers gain a universal bridge between quantum and classical hardware, making scalable error correction, hybrid applications and real‑time quantum‑GPU workflows practical.

In the Asia‑Pacific region, Japan’s Global Research and Development Center for Business by Quantum-AI technology (G-QuAT) at the National Institute of Advanced Industrial Science and Technology (AIST) and RIKEN Center for Computational Science, Korea’s Korea Institute of Science and Technology Information (KISTI), Taiwan’s National Center for High-Performance Computing (NCHC), Singapore’s National Quantum Computing Hub (a joint initiative of Singapore’s Centre for Quantum Technologies, A*STAR Institute of High Performance Computing and National Supercomputing Centre Singapore) and Australia’s Pawsey Supercomputing Research Centre are among the early adopters.

Across Europe and the Middle East, NVQLink is being embraced by CINECA, Denmark’s DCAI (operator of Denmark’s AI Supercomputer), France’s Grand Équipement National de Calcul Intensif (GENCI), the Czech Republic’s IT4Innovations National Supercomputing Center (IT4I), Germany’s Jülich Supercomputing Centre (JSC), Poland’s Poznań Supercomputing and Networking Center (PCSS), the Technology Innovation Institute (TII) in the UAE and Saudi Arabia’s King Abdullah University of Science and Technology (KAUST).

In the United States, leading national laboratories, including Brookhaven National Laboratory, Fermi National Accelerator Laboratory, Lawrence Berkeley National Laboratory, Los Alamos National Laboratory, MIT Lincoln Laboratory, National Energy Research Scientific Computing Center, Oak Ridge National Laboratory, Pacific Northwest National Laboratory and Sandia National Laboratories, are also adopting NVQLink to advance hybrid quantum‑classical research.

Creating Real‑World Hybrid Applications

Quantinuum’s Helios QPU with NVQLink delivered:

- First real‑time decoding of qLDPC error‑correction codes

- ~99% fidelity with NVQLink correction vs. ~95% without

- Response time of 60 microseconds, exceeding Helios’ 1‑millisecond requirement by 16x
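The headroom figure in the last bullet follows from simple arithmetic on the numbers quoted in this list. A quick Python check is sketched below; the function and variable names are chosen here purely for illustration, and the fidelity values are the approximate figures given above.

```python
# Figures quoted in the list above.
REQUIRED_LATENCY_US = 1_000  # Helios' 1-millisecond requirement, in microseconds
MEASURED_LATENCY_US = 60     # reported response time, in microseconds


def latency_headroom(required_us: float, measured_us: float) -> float:
    """How many times faster the measured response is than the requirement."""
    return required_us / measured_us


def error_reduction(fidelity_without_pct: float, fidelity_with_pct: float) -> float:
    """Ratio of error rates (100 - fidelity) without vs. with correction."""
    return (100 - fidelity_without_pct) / (100 - fidelity_with_pct)


headroom = latency_headroom(REQUIRED_LATENCY_US, MEASURED_LATENCY_US)
print(f"latency headroom: {headroom:.1f}x")                      # 16.7x, quoted as 16x
print(f"error-rate reduction: {error_reduction(95, 99):.0f}x")   # 5x
```

Read this way, moving from roughly 95% to roughly 99% fidelity corresponds to about a fivefold drop in error rate, and 60 microseconds leaves a margin of more than 16x against the 1-millisecond budget.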

NVQLink unites quantum processors with GPU supercomputing for scalable error correction and hybrid applications. Scientists gain a single programming environment through CUDA‑Q APIs. Developers can build and test quantum‑GPU workflows in real time.

With NVQLink, the world’s supercomputing centers are laying the foundation for practical quantum‑classical systems, connecting diverse quantum processors to NVIDIA accelerated computing at unprecedented speed and scale.

NVIDIA and RIKEN Advance Japan’s Scientific Frontiers

NVIDIA and RIKEN are building two new GPU‑accelerated supercomputers to expand Japan’s leadership in AI for science and quantum computing. Together the systems will feature 2,140 NVIDIA Blackwell GPUs connected through the GB200 NVL4 platform and NVIDIA Quantum‑X800 InfiniBand networking, strengthening Japan’s sovereign AI strategy and secure domestic infrastructure.

- AI for Science System: 1,600 Blackwell GPUs will power research in life sciences, materials science, climate and weather forecasting, manufacturing and laboratory automation.

- Quantum Computing System: 540 Blackwell GPUs will accelerate quantum algorithms, hybrid simulation and quantum‑classical methods.

The partnership builds on RIKEN’s collaboration with Fujitsu and NVIDIA to codesign FugakuNEXT, successor to the Fugaku supercomputer, expected to deliver 100x greater application performance and integrate production‑level quantum computers by 2030.

The two new RIKEN systems are scheduled to be operational in spring 2026.

Arm Adopting NVIDIA NVLink Fusion

AI is reshaping data centers in a once-in-a-generation architectural shift, where efficiency per watt defines success. At the center is Arm Neoverse, deployed in over a billion cores and projected to reach 50% hyperscaler market share by 2025. Every major provider (AWS, Google, Microsoft, Oracle and Meta) is building on Neoverse, underscoring its role in powering AI at scale.

To meet surging demand, Arm is extending Neoverse with NVIDIA NVLink Fusion, the high-bandwidth, coherent interconnect first pioneered with Grace Blackwell. NVLink Fusion links CPUs, GPUs and accelerators into one unified rack-scale architecture, removing memory and bandwidth bottlenecks that limit AI performance. Connected with Arm’s AMBA CHI C2C protocol, it ensures seamless data movement between Arm-based CPUs and partners’ preferred accelerators.

Together, Arm and NVIDIA are setting a new standard for AI infrastructure, enabling ecosystem partners to build differentiated, energy-efficient systems that accelerate innovation across the AI era.

“Folks building their own Arm CPU, or using an Arm IP, can even have access to NVLink Fusion, be able to connect that Arm CPU to an NVIDIA GPU or to the rest of the NVLink ecosystem, and that’s happening at the racks and scale-up infrastructure,” said Buck.

Smarter Power for Accelerated Computing

As AI factories scale, energy is becoming the new bottleneck. The NVIDIA Domain Power Service (DPS) flips that constraint into an opportunity, turning power into a dynamic, orchestrated resource. Running as a Kubernetes service, DPS models and manages energy use across the data center, from rack to room to facility. It enables operators to extract more performance per megawatt by constraining power intelligently, improving throughput without expanding infrastructure.
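One way to picture managing power as a shared, constrained resource is as a budgeting problem. The sketch below is a hypothetical illustration only: it does not use or describe the DPS API, and the `Rack` type, the `allocate_power` function and the proportional-sharing policy are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Rack:
    """Hypothetical rack description; not a DPS data type."""
    name: str
    requested_kw: float  # power the rack's workloads would like to draw
    min_kw: float        # floor below which the rack cannot usefully run


def allocate_power(racks: List[Rack], budget_kw: float) -> Dict[str, float]:
    """Give every rack its floor, then share what is left of the budget
    in proportion to each rack's unmet request (an assumed policy)."""
    floor_total = sum(r.min_kw for r in racks)
    if floor_total > budget_kw:
        raise ValueError("budget cannot cover the minimum power floors")
    spare = budget_kw - floor_total
    unmet = {r.name: max(r.requested_kw - r.min_kw, 0.0) for r in racks}
    unmet_total = sum(unmet.values())
    # Never hand out more than was requested, even if the budget allows it.
    scale = min(1.0, spare / unmet_total) if unmet_total > 0 else 0.0
    return {r.name: r.min_kw + unmet[r.name] * scale for r in racks}


racks = [Rack("a", requested_kw=100.0, min_kw=40.0),
         Rack("b", requested_kw=60.0, min_kw=20.0)]
caps = allocate_power(racks, budget_kw=110.0)
print(caps)  # {'a': 70.0, 'b': 40.0}
```

Here a 110 kW budget is split so that both racks keep running above their floors while the total stays within the limit. A production system would also weigh workload priority, thermal limits and time-varying demand, none of which this sketch attempts.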

DPS integrates tightly with the NVIDIA Omniverse DSX Blueprint, a platform for designing and operating next-generation data centers. It works alongside technologies like Power Reservation Steering to balance workloads across the facility and the Workload Power Profile Solution to tune GPU power to the needs of specific jobs. Together, they form DSX Boost, an energy-aware control layer that maximizes efficiency while meeting performance targets.

DPS also extends beyond the data center. With grid-facing APIs, it supports automated load shedding and demand response, helping utilities stabilize the grid during peak events. The result is a resilient, grid-interactive AI factory that turns every watt into measurable progress.